The Basics of Autonomous Vehicles, Part I: Artificial Intelligence

Fish & Richardson

Authors: Phillip Goter and Joseph Herriges

Two of the most exciting emerging technologies over the past few years have been artificial intelligence (AI) and autonomous vehicles (AVs). And the application of AI to achieve vehicle autonomy holds tremendous potential. While there is a great deal of excitement surrounding the promise of AI to solve AV issues, the concept of AI can be nebulous, as it is an umbrella term that encompasses many different technologies. In this article, we will provide an overview of AI technologies in use in the AV space and a few ways AI could be used to power AVs.

What Is Artificial Intelligence?

Formally, artificial intelligence (AI) refers to "machines that respond to stimulation consistent with traditional responses from humans, given the human capacity for contemplation, judgment, and intention."1 In practice, it refers to the science of making machines that mimic human perception and response. AI systems typically demonstrate some of the behaviors of human cognition, such as planning, learning, categorizing, problem-solving, and recognizing patterns. AI relies on several enabling technologies to function, many of which have become buzzwords in themselves, such as "machine learning," "deep learning," and "neural networks." At their core, these technologies rely on "big data"—they require an internal or external system that evaluates large amounts of data, identifies patterns in it, and determines the significance of the patterns in order to draw inferences, arrive at conclusions, and respond—much the same way the human brain works.

Machine Learning

Generally, machine learning refers to the process by which computers learn from data so they can perform tasks without being explicitly programmed to do so. It can be either "supervised learning," in which a human first supplies input data along with the expected output for each example, or "unsupervised learning," in which the machine learns from the data itself and arrives at conclusions without an expected output. A basic application of machine learning is image recognition, wherein a machine categorizes images into groups based on their content.
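To make the distinction concrete, here is a minimal Python sketch of both approaches. It is illustrative only: the data (object widths in meters), the labels, and the function names `fit_supervised`, `predict`, and `fit_unsupervised` are our own assumptions, and the techniques shown (a nearest-average classifier and a two-group clustering loop) are deliberately simple stand-ins for production methods.

```python
from statistics import mean

def fit_supervised(samples, labels):
    """Supervised: a human supplies the expected label for every sample."""
    groups = {}
    for x, label in zip(samples, labels):
        groups.setdefault(label, []).append(x)
    # The learned "model" is simply the average value seen for each label.
    return {label: mean(values) for label, values in groups.items()}

def predict(model, x):
    """Assign x to the label whose learned average is closest."""
    return min(model, key=lambda label: abs(model[label] - x))

def fit_unsupervised(samples, rounds=10):
    """Unsupervised: split unlabeled samples into two groups (1-D k-means, k=2)."""
    centers = [min(samples), max(samples)]
    for _ in range(rounds):
        clusters = [[], []]
        for x in samples:
            nearest = 0 if abs(x - centers[0]) <= abs(x - centers[1]) else 1
            clusters[nearest].append(x)
        # Move each center to the average of the samples assigned to it.
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return sorted(centers)

# Hypothetical data: widths of detected objects, in meters.
widths = [0.5, 0.6, 0.7, 1.8, 1.9, 2.0]
labels = ["pedestrian"] * 3 + ["car"] * 3

model = fit_supervised(widths, labels)
print(predict(model, 0.65))       # uses the human-provided labels
print(fit_unsupervised(widths))   # finds two group centers with no labels at all
```

In the supervised case the system can name what it sees because a human told it the names; in the unsupervised case it discovers only that two groups exist, and a human still would need to interpret what those groups mean.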

Neural Networks

A neural network is a type of machine learning system that processes information between interconnected nodes (similar to neurons in the human brain) to find patterns, establish connections, and derive meaning from data. For example, consider an algorithm that a neural network would use to identify pictures of cats. An engineer first would create a data set of millions of pictures of different animals. The engineer then would go through all of those images and label each one as either "cat" or "not cat." Once the data was classified, the engineer would prime the neural network by telling it which of a sample of the images are "cat" and which are "not cat." The neural network then would be allowed to run through the data set on its own to attempt to classify each image. When it is done, the engineer would tell it whether its classification of each picture was correct. This process then would be run again repeatedly until the neural network achieved near 100 percent accuracy.
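The guess-then-correct loop described above can be sketched in a few lines of Python. This is a simplification under stated assumptions: it trains a single artificial node rather than a full network, and instead of raw images it uses three made-up yes/no features per picture. The names `train` and `classify`, the features, and the training settings are all hypothetical.

```python
import math

def sigmoid(z):
    """Squash any number into the range 0..1 so it can be read as a confidence."""
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, epochs=2000, lr=0.5):
    """Train one artificial node by repeatedly guessing and correcting."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            guess = sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
            error = guess - label  # feedback: how far off was the guess?
            # Nudge each weight in the direction that shrinks the error.
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

def classify(weights, bias, features):
    score = sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
    return "cat" if score >= 0.5 else "not cat"

# Hypothetical labeled data: (has_whiskers, has_pointed_ears, has_feathers),
# with label 1 for "cat" and 0 for "not cat".
labeled = [
    ((1, 1, 0), 1),
    ((1, 0, 0), 1),
    ((0, 0, 1), 0),
    ((0, 1, 1), 0),
    ((0, 1, 0), 0),
]

weights, bias = train(labeled)
correct = sum(classify(weights, bias, f) == ("cat" if y else "not cat")
              for f, y in labeled)
print(f"{correct} of {len(labeled)} training pictures classified correctly")
```

A real image classifier works on pixel values and chains many such nodes together, but the core idea is the same: each round of feedback adjusts the connection weights slightly until the guesses match the labels.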

Deep Learning

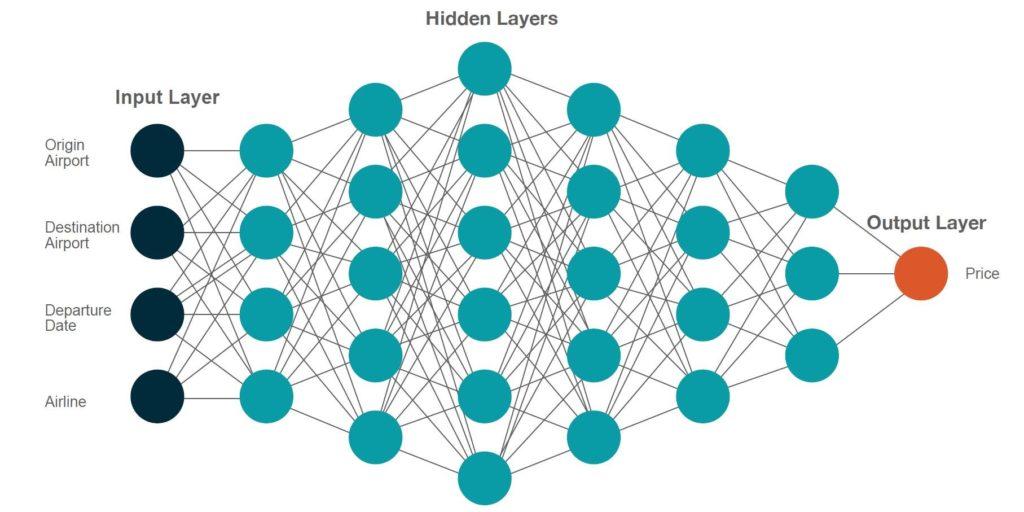

Deep learning is an advanced type of machine learning wherein a large system of neural networks analyzes vast amounts of data and arrives at conclusions without first being trained by a human. An example of deep learning would be a predictive algorithm that estimates the price of a plane ticket based on the input data of (1) origin airport, (2) destination airport, (3) departure date, and (4) airline. This input data is known as an "input layer." The data from the input layer then is run through a set of "hidden layers" that perform complex mathematical calculations on the input data to arrive at a conclusion, known as the "output layer"—in this case, the ticket price (see Figure 1).

Figure 1: Deep learning input layer, hidden layers, and output layer2

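In this example, the machine performs calculations on the data itself in its hidden layers and outputs a result that was not predetermined. Strictly speaking, a price predictor of this kind usually is trained on historical ticket prices, which makes its training supervised; deep learning becomes unsupervised only when the network is given no expected outputs and must find structure in the data on its own.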
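The flow from input layer to hidden layer to output layer can be sketched as follows. Everything numeric here is an assumption made for illustration: the four inputs are arbitrary numeric encodings of the origin, destination, departure date, and airline; the weights are hand-picked rather than learned; and the sketch uses one hidden layer where a real deep network would use several. The helper name `dense` is ours.

```python
def relu(x):
    """A common hidden-layer activation: pass positives through, zero out negatives."""
    return max(0.0, x)

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each node is a weighted sum of all inputs."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs

# Input layer: hypothetical numeric encodings of origin airport, destination
# airport, departure date, and airline.
input_layer = [0.2, 0.9, 0.5, 0.1]

# Hand-picked, untrained weights, purely for illustration.
hidden_weights = [
    [0.5, -0.2, 0.1, 0.4],
    [-0.3, 0.8, 0.2, 0.0],
    [0.1, 0.1, -0.5, 0.6],
]
hidden_biases = [0.0, 0.1, 0.2]
output_weights = [[120.0, 200.0, 80.0]]
output_biases = [50.0]

hidden_layer = dense(input_layer, hidden_weights, hidden_biases, relu)
output_layer = dense(hidden_layer, output_weights, output_biases)
print(f"Estimated ticket price: ${output_layer[0]:.2f}")
```

Training a network amounts to finding weight values like these automatically, so that the output layer's estimate tracks real prices.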

The Origins of AI Technology

AI has been around longer than most would imagine. Alan Turing, the celebrated British World War II codebreaker, is credited with originating the concept in 1950 when speculating about "thinking machines" that could function similarly to the human brain.3 Turing proposed that a computer can be said to be "intelligent" if it can mimic human responses to questions to such an extent that an observer would not be able to tell whether he or she were talking to a human or a machine. This became known as the "Turing Test."

The term "artificial intelligence" was coined by John McCarthy, widely considered the "father of artificial intelligence," at a conference in 1956. To McCarthy, a computer was intelligent when it could do things that, when done by humans, would be said to involve intelligence. Early successes included Allen Newell and Herbert Simon's General Problem Solver and Joseph Weizenbaum's ELIZA. Despite these early successes and enthusiasm about AI, the field encountered technology barriers. Limited storage capacity for large amounts of data and limited processor power slowed the development of AI technologies throughout much of the 1970s. By the 1990s, however, many of the early goals of AI became attainable due to advances in computing technology, exemplified by the defeat of reigning world chess champion and grandmaster Garry Kasparov by IBM's Deep Blue system in 1997. Advancements in AI have continued to accompany advancements in computer technologies, as computers have become faster, cheaper, more efficient, and more capable of storing the large amounts of data necessary to enable AI.

At present, AI is being deployed across a wide range of industries, with new applications being discovered all the time. In the consumer sector, Siri, Alexa, and other voice-enabled personal assistants utilize natural language processing to understand and respond to users' vocal prompts. Streaming music and video services like Spotify and Netflix also use machine learning to enable their recommender systems that identify the types of music and movies users enjoy and then recommend new content based on this identification. In the financial field, AI is being used increasingly in loan underwriting to consider more variables than traditional underwriting models, which proponents say helps them make more accurate lending decisions and decrease the risks of default. In health care, it is showing promise in diagnosing certain medical conditions by evaluating hundreds or even thousands of medical images to detect the presence of anomalies.

How AI Powers AVs

AI in the context of AVs focuses on the perception of environment and automated responses to that environment while executing the primary goal of getting the vehicle safely from origin to destination. Below, we discuss two functions of AI in the context of AVs.

Image and Pattern Recognition

AVs must be able to "see" the world around them to travel safely from point A to point B. To do so, they must be able to recognize all of the elements that collectively make up the transportation system, including other vehicles, pedestrians, and cyclists as well as the vehicle's environment, such as roadway infrastructure, buildings, intersection controls, signs, pavement markings, and weather conditions. The safe operation of an AV requires connectivity between the vehicle and other elements of the transportation system. Engineers have identified five key types of connectivity:

- V2I: Vehicle to infrastructure (roads, traffic lights, mile markers, etc.)

- V2V: Vehicle to vehicle (other AVs)

- V2C: Vehicle to cloud (data to power navigation systems, entertainment systems, etc.)

- V2P: Vehicle to pedestrian (passive communication from pedestrian to the AV)

- V2X: Vehicle to everything (bicycles, buildings, trees, etc.)

A common application of AI technology in the AV industry is the use of neural networks to train AVs to recognize individual elements of the transportation system. Engineers feed the network hundreds of thousands of images—such as stop signs, yield signs, speed limit signs, road markings, etc.—to train it to recognize them in the field.

In addition to cameras, AVs use several different sensing technologies that allow them to perceive objects in the field. Two of the most popular sensing technologies for this purpose are RADAR and LiDAR. RADAR (Radio Detection and Ranging) works by emitting radio waves in pulses. Once those pulses hit an object, they return to the sensor, providing data on the object's location, distance, speed, and direction of movement. LiDAR (Light Detection and Ranging) works similarly by firing thousands of laser beams in all directions and measuring the amount of time it takes for the beams to return. The returning beams create "point clouds" that represent objects surrounding the vehicle.4 By emitting thousands of beams per second, LiDAR sensors can create an incredibly detailed 3D map of the world around them in real time. The sensor inputs from the AV's RADAR and LiDAR systems are then fed into a centralized AI computer in a process known as "sensor fusion," making it possible for the vehicle to combine many points of sensor data such as shape, speed, and distance.
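The arithmetic behind a point cloud is straightforward: because a laser pulse travels to the object and back, the distance is the speed of light multiplied by the round-trip time, divided by two. The Python sketch below converts a few hypothetical LiDAR returns into 3D points and then performs a toy version of sensor fusion by attaching a RADAR speed reading to the nearest point. The sample readings and the function names `to_point` and `fuse` are our assumptions, not a description of any particular vendor's system.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second

def range_from_round_trip(seconds):
    """Distance to the object: the pulse goes out and back, so halve the path."""
    return SPEED_OF_LIGHT * seconds / 2.0

def to_point(round_trip_s, azimuth_deg, elevation_deg):
    """Convert one LiDAR return into an (x, y, z) point relative to the sensor."""
    r = range_from_round_trip(round_trip_s)
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (r * math.cos(el) * math.cos(az),
            r * math.cos(el) * math.sin(az),
            r * math.sin(el))

def fuse(lidar_points, radar_detection):
    """Toy sensor fusion: pair a RADAR speed with the nearest LiDAR point."""
    rx, ry, speed_mps = radar_detection
    nearest = min(lidar_points, key=lambda p: math.hypot(p[0] - rx, p[1] - ry))
    return {"position": nearest, "speed_mps": speed_mps}

# Hypothetical returns: (round-trip time in seconds, azimuth, elevation in degrees).
returns = [(2.0e-7, 0.0, 0.0), (2.0e-7, 90.0, 0.0), (1.0e-7, 45.0, 10.0)]
point_cloud = [to_point(*r) for r in returns]

# Hypothetical RADAR detection: (x, y, closing speed in meters per second).
tracked = fuse(point_cloud, (30.0, 0.5, -3.0))
print(tracked)
```

A round trip of 200 nanoseconds corresponds to an object roughly 30 meters away, which gives a sense of the timing precision these sensors require.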

Automated Decision Making

Besides recognizing elements in the transportation system, AVs also must be able to make safe driving decisions based on their accurate perception of real-life traffic conditions. But simple, manual instructions such as "stop when you see red" are not enough; AV decision-making is powered by expert systems—AI software that attempts to mimic the decision-making expertise of an experienced driver.5

Expert systems work by pairing a knowledge base with an inference engine. A knowledge base is a collection of data, information, and past experiences relevant to the task at hand, and it contains both factual knowledge (information widely accepted by human experts in the field) and heuristic knowledge (practice, judgment, evaluation, and guesses). The inference engine uses the information contained in the knowledge base to arrive at solutions to problems. It does so by:

- Applying rules repeatedly to the facts, which are obtained from earlier rule application

- Adding new knowledge to the knowledge base when it is acquired

- Resolving conflicts when multiple rules are applicable to a particular case

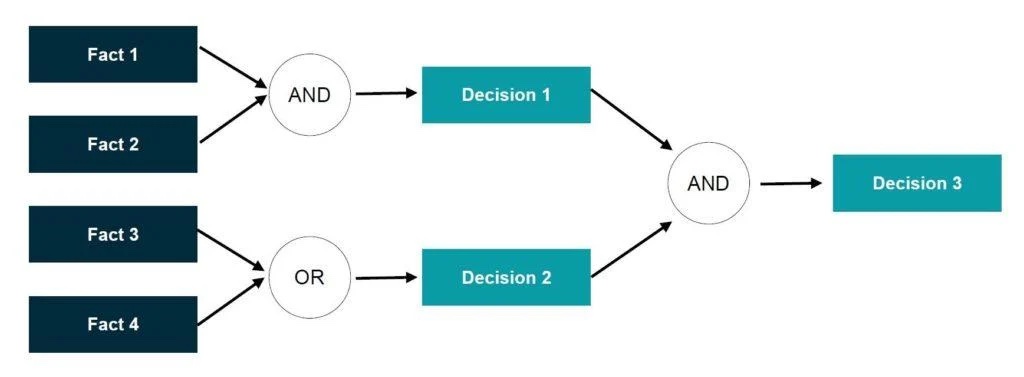

To recommend a solution, the inference engine uses both forward chaining and backward chaining. Forward chaining answers the question "What can happen next?" and involves identifying the facts, following a chain of conditions and decisions, and arriving at a solution (see Figure 2).

Figure 2: Forward Chaining (source)

Backward chaining answers the question "Why did this happen?" and requires the inference engine to identify which conditions caused a particular result (see Figure 3).

Figure 3: Backward Chaining (source)

In the context of AVs, factual knowledge consists of the rules of the road as well as the procedures for operating the vehicle. Heuristic knowledge consists of the collective past experiences of experienced drivers that inform their decision making; for example, understanding that there are ice patches on the road and consequently reducing speed and allowing increased stopping distance.
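Forward and backward chaining can be illustrated with a very small rule set. The Python sketch below is a toy, not a production expert system: the rules, the fact names, and the functions `forward_chain` and `backward_chain` are hypothetical, the rule set is assumed to contain no circular dependencies, and conflict resolution between competing rules is omitted.

```python
# Hypothetical knowledge base: each rule is (required conditions, conclusion).
RULES = [
    ({"temperature_below_freezing", "road_wet"}, "ice_likely"),
    ({"ice_likely"}, "reduce_speed"),
    ({"ice_likely"}, "increase_following_distance"),
    ({"traffic_light_red"}, "stop"),
]

def forward_chain(facts, rules=RULES):
    """What can happen next? Apply rules repeatedly until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)  # new knowledge feeds later rule applications
                changed = True
    return known

def backward_chain(goal, facts, rules=RULES):
    """Why did this happen? Trace a result back to the observed facts behind it.

    Returns the set of supporting facts, or None if the goal cannot be derived.
    """
    if goal in facts:
        return {goal}
    for conditions, conclusion in rules:
        if conclusion != goal:
            continue
        support = set()
        for condition in conditions:
            found = backward_chain(condition, facts, rules)
            if found is None:
                break  # this rule cannot explain the goal; try the next rule
            support |= found
        else:
            return support
    return None

observed = {"temperature_below_freezing", "road_wet"}
print(sorted(forward_chain(observed)))
print(sorted(backward_chain("reduce_speed", observed)))
```

Starting from two sensor observations, forward chaining arrives at the decisions to slow down and leave more room; backward chaining starts from the decision to slow down and recovers the two observations that justified it.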

Conclusion

AVs hold great promise. From automating supply chains to eliminating drunk driving accidents to transforming urban land use patterns, AVs have the potential to fundamentally change the way we live and work. But many of the technologies that enable AVs are still in their infancy and will require continued research and development before AVs can be rolled out on a widespread basis. While these efforts likely will continue at breakneck speed, the legal and regulatory landscape surrounding AVs is already struggling to catch up. In Part II, we will focus on the legal challenges and opportunities facing the AV industry.

The opinions expressed are those of the authors on the date noted above and do not necessarily reflect the views of Fish & Richardson P.C., any other of its lawyers, its clients, or any of its or their respective affiliates. This post is for general information purposes only and is not intended to be and should not be taken as legal advice. No attorney-client relationship is formed.